Matrix inverse

Categories: matrices

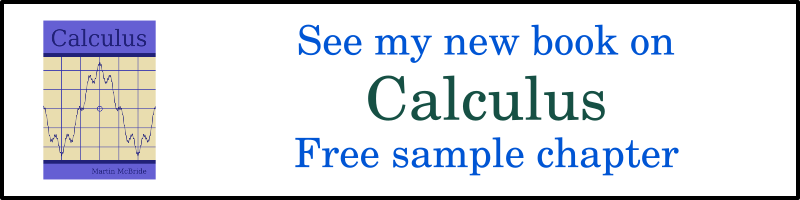

We are familiar with the inverse (or reciprocal) of a number:

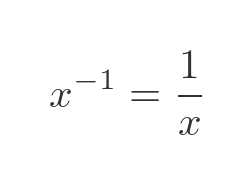

The inverse of a square matrix has similar notation (non-square matrices cannot be inverted):

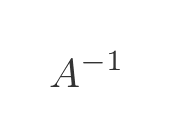

Matrices don't have a divide operation. But we can define the inverse differently:

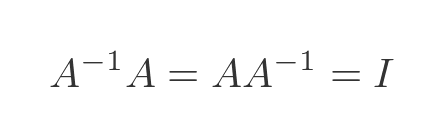

This definition works for matrices too:

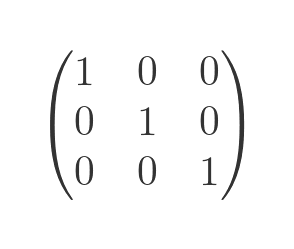

Here I is the identity matrix. This is a square matrix with ones along its leading diagonal and zeros everywhere else, like this 3 by 3 identity matrix:

$$I = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}$$

Existence of the inverse

For a real number x, we know that the inverse only exists if x is not 0. For a matrix, there are 2 conditions. The matrix must be square, and the determinant of the matrix must not be 0.
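The two conditions can be checked in a few lines of Python. This is a minimal sketch: `determinant` and `has_inverse` are names chosen for illustration, and the determinant is computed by cofactor expansion along the first row, which is only practical for small matrices. It assumes exact entries (integers or fractions); with floating-point entries, comparing the determinant with exactly 0 is unreliable and a tolerance would be needed.

```python
def determinant(m):
    """Determinant by cofactor expansion along the first row.

    Only practical for small matrices: the work grows factorially with size.
    """
    if not m:
        return 1  # determinant of the empty (0 by 0) matrix
    total = 0
    for j in range(len(m)):
        # Remove row 0 and column j to get the sub-matrix for element (0, j)
        sub = [row[:j] + row[j + 1:] for row in m[1:]]
        sign = 1 if j % 2 == 0 else -1
        total += sign * m[0][j] * determinant(sub)
    return total


def has_inverse(m):
    """True if m is a non-empty square matrix with a non-zero determinant."""
    n = len(m)
    if n == 0 or any(len(row) != n for row in m):
        return False  # condition 1 fails: not a (non-empty) square matrix
    return determinant(m) != 0  # condition 2: determinant must not be 0


print(has_inverse([[2, 1], [3, 0]]))        # True (determinant is -3)
print(has_inverse([[1, 2], [2, 4]]))        # False (determinant is 0)
print(has_inverse([[1, 2, 3], [4, 5, 6]]))  # False (not square)
```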

Applications of inverse matrices

The inverse matrix is useful for solving systems of simultaneous equations. This has applications in many areas including least squares linear regression, finite element analysis, and electrical engineering.
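As a small illustration, here is a sketch using NumPy (assumed to be installed) that solves a made-up pair of simultaneous equations, 2x + y = 5 and 3x = 6, by multiplying both sides of the matrix equation by the inverse of the coefficient matrix.

```python
import numpy as np

# Made-up system:  2x + y = 5
#                  3x     = 6
A = np.array([[2.0, 1.0],
              [3.0, 0.0]])
b = np.array([5.0, 6.0])

# A @ v = b, so multiplying both sides by the inverse gives v = A^-1 @ b
v = np.linalg.inv(A) @ b
print(v)  # close to [2, 1], i.e. x = 2, y = 1

# Same answer without forming the inverse explicitly
print(np.linalg.solve(A, b))
```

Forming the inverse mirrors the maths, but numerical libraries generally recommend `np.linalg.solve` for this job, because it is faster and more accurate than computing the inverse first.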

Matrices are used extensively in computer graphics, including 3D graphics for gaming. The inverse matrix reverses a transformation. This has various uses, including inverse kinematics - for example calculating the elbow and shoulder joint angles based on the wrist position as a character moves their hand.

Example - 2 by 2 matrix

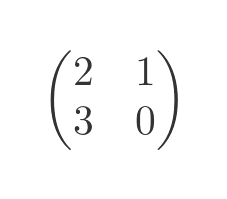

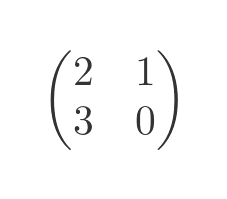

As an example, we will calculate the inverse of this matrix:

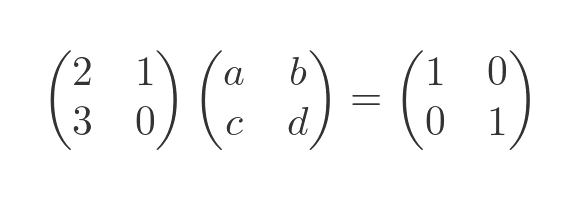

How can we calculate the inverse matrix? Well, we know that the original matrix multiplied by the inverse matrix gives the identity matrix. If we say that the inverse matrix has elements a, b, c, and d, we have this equation:

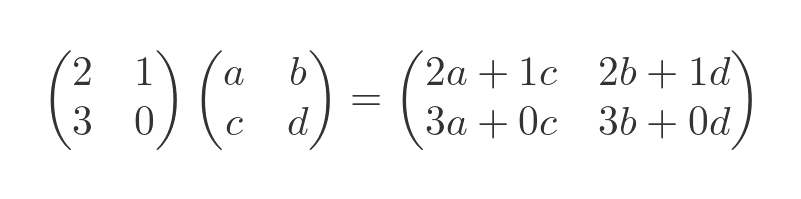

If we multiply the original and inverse, using standard matrix multiplication, we get this:

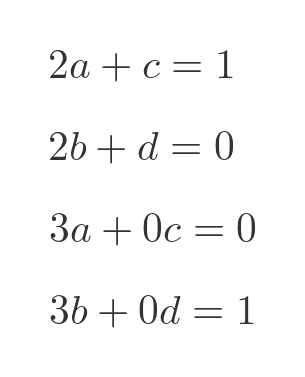

We need to find the values of a to d that make the right-hand side (RHS) above equal to the identity matrix. So we need to satisfy these 4 equations:

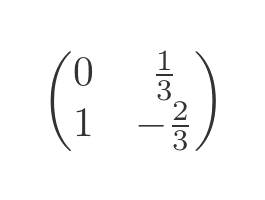

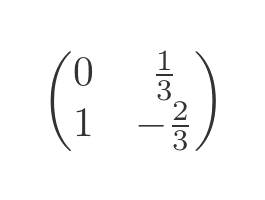

The bottom 2 equations can be solved quite easily: they tell us that a = 0 and b = 1/3. Substituting these values into the top 2 equations gives c = 1 and d = -2/3. So the inverse matrix is:

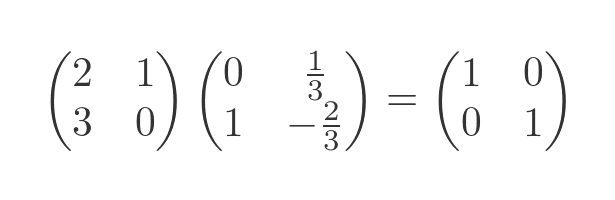

We can easily verify that this is the inverse matrix by simple matrix multiplication:
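The check can also be done exactly in Python using the standard `fractions` module. The example matrix itself appears only as an image, so this sketch assumes it is [[2, 1], [3, 0]]: that is the matrix which produces the equations solved above when the inverse is written as [[a, b], [c, d]], and its determinant, -3, matches the value given later in the article. The `matmul` helper is written here for illustration.

```python
from fractions import Fraction


def matmul(p, q):
    """Standard matrix product of two matrices stored as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]


# Assumed example matrix (inferred from the equations and the inverse)
A = [[2, 1],
     [3, 0]]
# Inverse found above: a = 0, b = 1/3, c = 1, d = -2/3
A_inv = [[Fraction(0), Fraction(1, 3)],
         [Fraction(1), Fraction(-2, 3)]]

print(matmul(A, A_inv) == [[1, 0], [0, 1]])  # True
print(matmul(A_inv, A) == [[1, 0], [0, 1]])  # True
```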

Reversing a transform

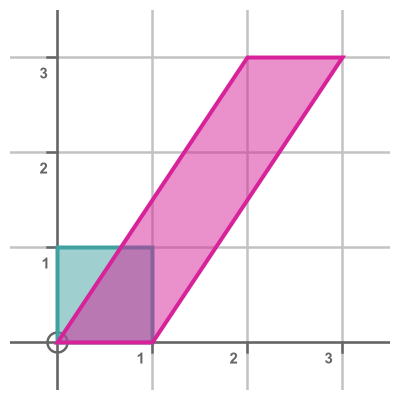

If we apply the previous matrix:

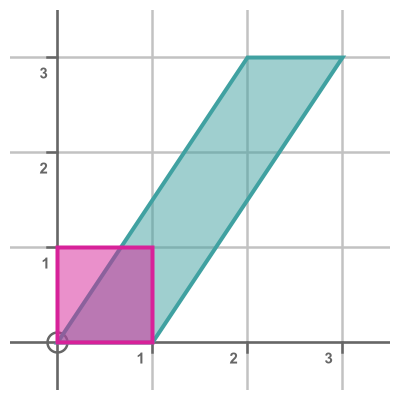

to a unit square, the shape is transformed like this:

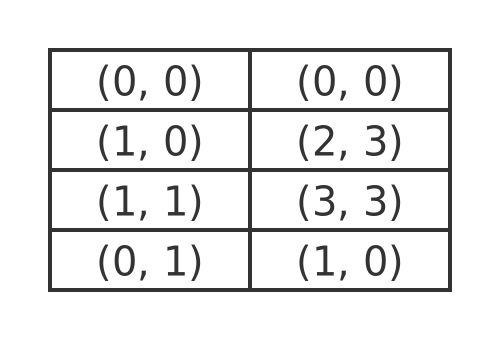

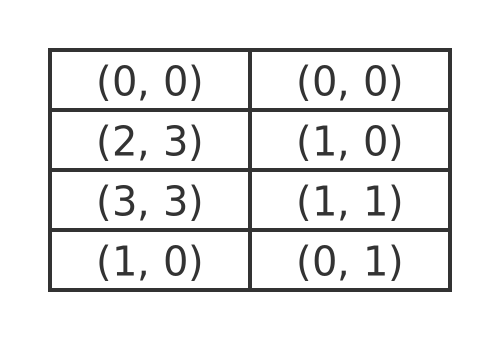

Here is the effect of the transformation on each corner of the shape:

This gives the forward transformation, from the cyan unit square to the magenta parallelogram.
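To reproduce the corner mapping, here is a short Python sketch. It assumes the example matrix is [[2, 1], [3, 0]] (the matrix consistent with the inverse found in the worked example) and treats each corner as a column vector; `transform` is a hypothetical helper, not a library function.

```python
# Assumed example matrix: not shown in the text, inferred from its inverse
A = [[2, 1],
     [3, 0]]


def transform(m, point):
    """Multiply a 2 by 2 matrix by the column vector (x, y)."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y,
            m[1][0] * x + m[1][1] * y)


unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]
transformed = [transform(A, corner) for corner in unit_square]
for before, after in zip(unit_square, transformed):
    print(before, "->", after)
# (0, 0) -> (0, 0)
# (1, 0) -> (2, 3)
# (1, 1) -> (3, 3)
# (0, 1) -> (1, 0)
```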

Now if we apply the inverse matrix to the transformed shape, we get back to the original unit square:

Here is the effect of the inverse transform on each corner of the previously transformed shape. This gives the inverse transformation from the cyan parallelogram back to the magenta unit square:
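The reverse step can be sketched the same way. This assumes the inverse [[0, 1/3], [1, -2/3]] found in the worked example, and takes the parallelogram corners to be the images of the unit square under the assumed original matrix [[2, 1], [3, 0]]. Exact fractions avoid rounding errors, so the corners come back exactly.

```python
from fractions import Fraction

# Inverse found in the worked example: [[0, 1/3], [1, -2/3]]
A_inv = [[Fraction(0), Fraction(1, 3)],
         [Fraction(1), Fraction(-2, 3)]]


def transform(m, point):
    """Multiply a 2 by 2 matrix by the column vector (x, y)."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y,
            m[1][0] * x + m[1][1] * y)


# Parallelogram corners: the unit square under the assumed matrix [[2, 1], [3, 0]]
parallelogram = [(0, 0), (2, 3), (3, 3), (1, 0)]
restored = [transform(A_inv, corner) for corner in parallelogram]
for before, after in zip(parallelogram, restored):
    print(before, "->", f"({after[0]}, {after[1]})")
# (0, 0) -> (0, 0)
# (2, 3) -> (1, 0)
# (3, 3) -> (1, 1)
# (1, 0) -> (0, 1)
```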

Determinant of inverse matrix

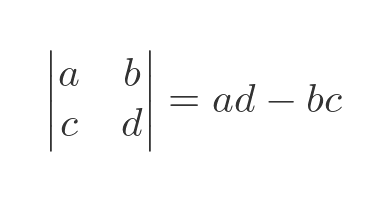

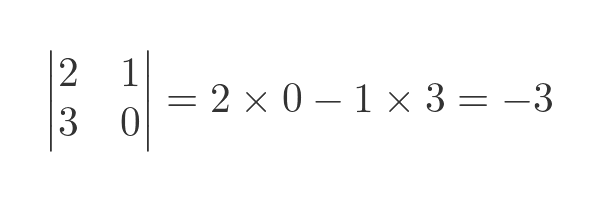

We can find the determinant of our original matrix using the standard formula for a 2 by 2 determinant:

If we apply this formula to the original matrix we get a determinant of -3:

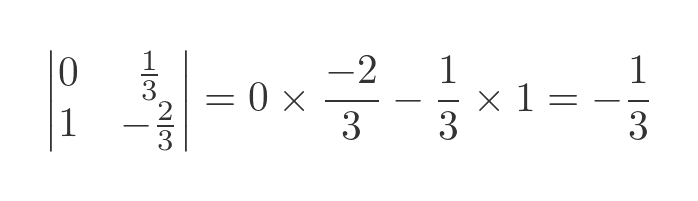

For the inverse matrix, we get a determinant of -1/3:

In general, for an n by n matrix, the determinant of the inverse matrix is the reciprocal of the determinant of the original matrix. This follows from the product rule for determinants: $\det(A)\det(A^{-1}) = \det(AA^{-1}) = \det(I) = 1$. It also explains why a matrix with a zero determinant has no inverse: 0 has no reciprocal, so no value of $\det(A^{-1})$ could satisfy this equation.
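The reciprocal relationship is easy to confirm for the 2 by 2 example. This sketch assumes the example matrix is [[2, 1], [3, 0]] with the inverse found earlier; `det2` is a hypothetical helper that applies the 2 by 2 formula, written with the letters p, q, r, s to avoid clashing with the a to d used for the inverse.

```python
from fractions import Fraction


def det2(m):
    """Determinant of a 2 by 2 matrix [[p, q], [r, s]]: p*s - q*r."""
    (p, q), (r, s) = m
    return p * s - q * r


A = [[2, 1], [3, 0]]                      # assumed example matrix
A_inv = [[Fraction(0), Fraction(1, 3)],   # inverse from the worked example
         [Fraction(1), Fraction(-2, 3)]]

print(det2(A))                # -3
print(det2(A_inv))            # -1/3
print(det2(A) * det2(A_inv))  # 1
```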

Finding the inverse of a general square matrix

We can find the inverse of a 2 by 2 matrix by expanding the equation of the inverse and solving it as a set of 4 simultaneous equations. This is quite easy to do, especially if one of the elements is 0, as in our example.

For a general n by n matrix we need a more systematic approach, especially since nowadays we will probably use a computer to do the calculation.

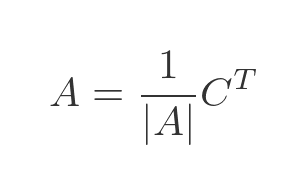

The general equation for the inverse of a square matrix A is:

$$A^{-1} = \frac{1}{\det A} C^{T}$$

In this equation C is the matrix of cofactors of A. The superscript T indicates that we need to use the transpose of this matrix (that is, we need to flip the matrix about its leading diagonal).

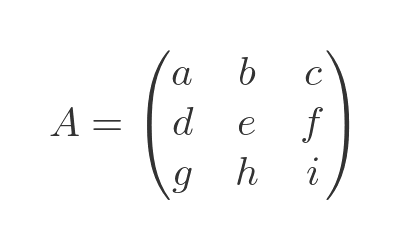

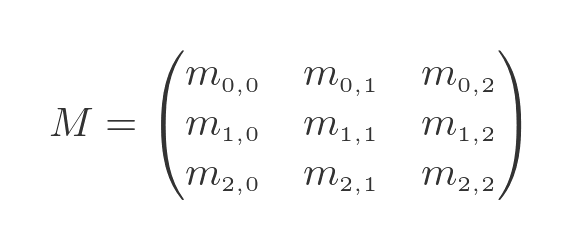

What is the matrix of cofactors? Well, to find that we first need to find the matrix of minors, M. This is best explained by example, using this 3 by 3 matrix (although the description here applies equally to the n by n case):

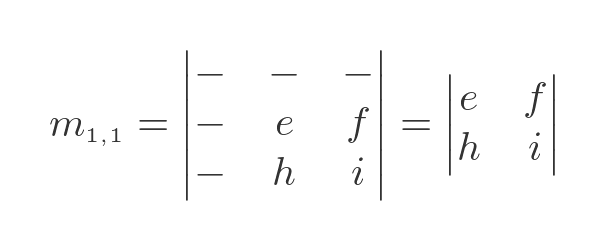

Each element of this matrix has a minor. This is found by removing the row and column of the element from the matrix and finding the determinant of the result. For example, the minor of (1, 1) is:

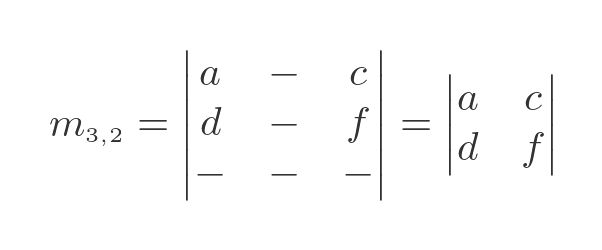

And the minor of (3, 2) is:

The matrix of minors M is the matrix whose element (i, j) is the minor of element (i, j) of the original matrix:

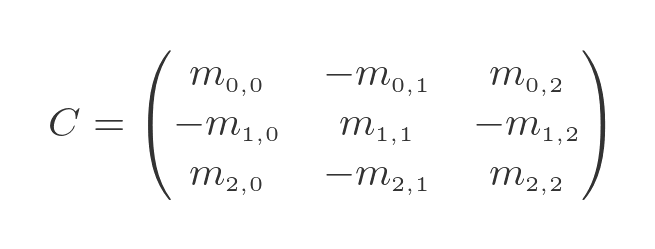
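The minor calculation translates directly into code. This sketch uses a hypothetical 3 by 3 matrix rather than the one in the figure, and Python's 0-based indices, so the article's minor (1, 1) is `minor(A, 0, 0)`. The determinant uses cofactor expansion, which is fine for small matrices but becomes very slow as n grows.

```python
def submatrix(m, i, j):
    """Copy of m with row i and column j removed (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def determinant(m):
    """Determinant by cofactor expansion along the first row."""
    if not m:
        return 1  # determinant of the empty (0 by 0) matrix
    return sum((-1) ** j * m[0][j] * determinant(submatrix(m, 0, j))
               for j in range(len(m)))


def minor(m, i, j):
    """Minor of element (i, j): determinant after removing its row and column."""
    return determinant(submatrix(m, i, j))


def minor_matrix(m):
    """Matrix whose element (i, j) is the minor of element (i, j) of m."""
    n = len(m)
    return [[minor(m, i, j) for j in range(n)] for i in range(n)]


# Hypothetical 3 by 3 example (not the matrix in the figure)
A = [[1, 2, 3],
     [0, 1, 4],
     [5, 6, 0]]

M = minor_matrix(A)
print(M)  # [[-24, -20, -5], [-18, -15, -4], [5, 4, 1]]
```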

Next, we need to find the cofactor matrix C. This is equal to the minor matrix but with every alternate element negated, like this:

You might recognise this from the article on determinants. Each element is multiplied by either 1 or -1 according to its position, in a chessboard pattern: element (i, j) is multiplied by $(-1)^{i+j}$, so the top left element is always positive.
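Applying the chessboard signs is a one-line transformation of the minor matrix. In this sketch the minor matrix is that of the hypothetical example [[1, 2, 3], [0, 1, 4], [5, 6, 0]], entered directly so that the block stands alone. With 0-based indices, `(-1) ** (i + j)` gives the same pattern as the 1-based version, since adding 1 to both i and j does not change the parity of i + j.

```python
def cofactor_matrix(minors):
    """Negate alternate elements of the minor matrix in a chessboard pattern.

    Element (i, j) is multiplied by (-1) ** (i + j), so the top left
    element (i = j = 0) keeps its sign.
    """
    n = len(minors)
    return [[(-1) ** (i + j) * minors[i][j] for j in range(n)]
            for i in range(n)]


# Minor matrix of the hypothetical example [[1, 2, 3], [0, 1, 4], [5, 6, 0]]
M = [[-24, -20, -5],
     [-18, -15, -4],
     [5, 4, 1]]

C = cofactor_matrix(M)
print(C)  # [[-24, 20, -5], [18, -15, 4], [5, -4, 1]]
```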

So, to summarise the process of finding the inverse of a matrix A (for any size n by n):

- Find the minor of each element, which is the determinant of the matrix created by removing the row and column of the current element from A.

- Form a matrix M of all the minors (the minor matrix).

- Form the cofactor matrix C by negating alternate values in the minor matrix.

- Find the inverse of A by dividing the transpose of C by the determinant of A.

The transpose of the cofactor matrix C is sometimes called the adjugate matrix.
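Putting the four steps together gives a small, exact inverse routine. This is a sketch for illustration rather than a production method: it assumes integer or `Fraction` entries (the standard `Fraction` constructor rejects floats when a denominator is also given), it uses a hypothetical 3 by 3 matrix [[1, 2, 3], [0, 1, 4], [5, 6, 0]], and the cofactor approach needs on the order of n! operations, so practical software typically uses methods such as Gaussian elimination or LU decomposition instead.

```python
from fractions import Fraction


def submatrix(m, i, j):
    """Copy of m with row i and column j removed (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def determinant(m):
    """Determinant by cofactor expansion along the first row."""
    if not m:
        return 1  # determinant of the empty (0 by 0) matrix
    return sum((-1) ** j * m[0][j] * determinant(submatrix(m, 0, j))
               for j in range(len(m)))


def inverse(m):
    """Inverse of a square matrix: transpose of cofactors divided by det."""
    n = len(m)
    if any(len(row) != n for row in m):
        raise ValueError("matrix must be square")
    det = determinant(m)
    if det == 0:
        raise ValueError("matrix has zero determinant, so no inverse")
    # Minors of each element, with the chessboard sign pattern applied
    cofactors = [[(-1) ** (i + j) * determinant(submatrix(m, i, j))
                  for j in range(n)] for i in range(n)]
    # Transpose the cofactor matrix (the adjugate) and divide by det
    return [[Fraction(cofactors[j][i], det) for j in range(n)]
            for i in range(n)]


A = [[1, 2, 3],
     [0, 1, 4],
     [5, 6, 0]]
A_inv = inverse(A)
print([[str(x) for x in row] for row in A_inv])
# [['-24', '18', '5'], ['20', '-15', '-4'], ['-5', '4', '1']]
```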

